Innovative sign language recognition and translation technology

Creating such advanced and complex technology requires in-depth knowledge and expertise across a range of fields.

Human languages are astoundingly complex and diverse. We express ourselves in countless ways, including speaking and signing. Unlike spoken languages, signed languages are perceived visually. The expressive and visual nature of sign languages allows their users to process several elements simultaneously. At the same time, it creates a unique set of challenges for sign language technology.

SignAll’s success can be attributed to many factors, including high levels of knowledge in the following areas:

The space and location used by the signer are part of the non-manual markers of sign language.

The shape, orientation, movement, and location of the hands are some of the key parameters of sign language.

Other non-manual markers include the eyes, mouth, brows, or head movements such as nodding or shaking.

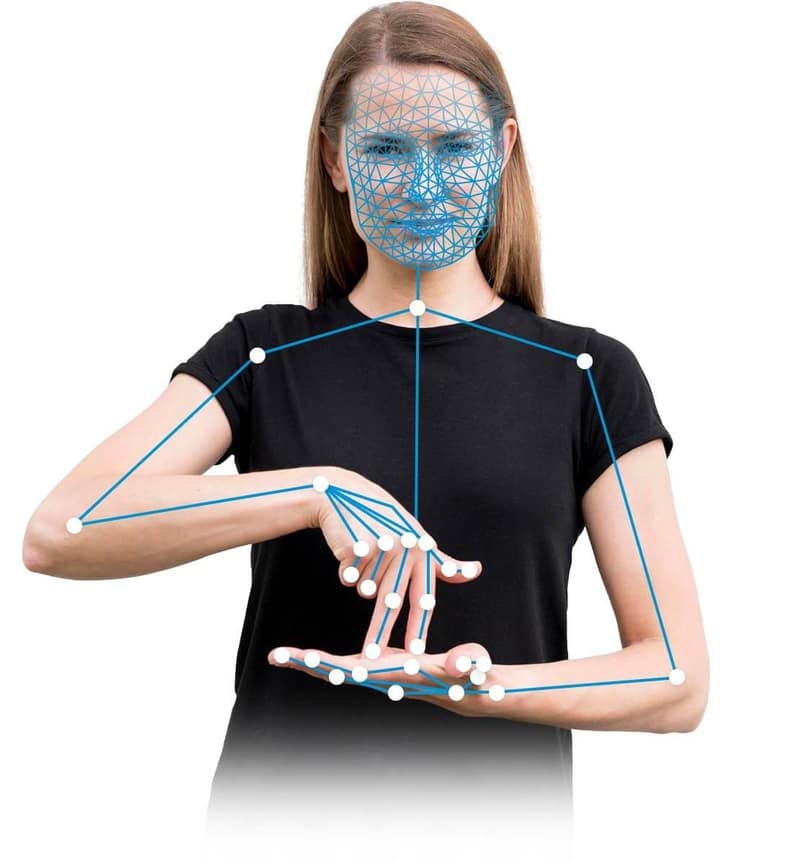

SignAll's core technology takes visual input from the external world and converts it into data that a computer can process.

Capturing these components is the first step towards effective sign language translation, but a much more complex process is required to turn this data into meaningful information.

Over the years, there have been many attempts to use technology to translate sign language, yet unfortunately all of them have failed to account for every important factor that makes up sign language.

The combined expertise of developers, researchers, linguists, and sign language experts has allowed SignAll to employ technology to capture and analyze the key elements of sign language.

Depth cameras create point cloud images, and an additional camera is used for color detection. The images are then merged to map the visual and spatial elements of the signer.
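As a rough illustration of this merging step, the sketch below back-projects a depth image into 3D points with a pinhole camera model and attaches the color of the matching pixel to each point. It assumes the depth and color images are already aligned pixel for pixel, and the intrinsics (`fx`, `fy`, `cx`, `cy`) and the function name are invented for the example; none of this is taken from SignAll's system.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Color = Tuple[int, int, int]


def merge_depth_and_color(
    depth: List[List[float]],
    color: List[List[Color]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Tuple[Point, Color]]:
    """Back-project each valid depth pixel to 3D and pair it with its color.

    Assumes both images have the same size and are already aligned.
    A depth of 0 (or less) is treated as "no measurement" and skipped.
    """
    cloud = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            # Pinhole model: pixel offset from the principal point, scaled by depth.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            cloud.append(((x, y, z), color[v][u]))
    return cloud


# Toy 2x2 frame (hypothetical values): depth in metres, color as RGB.
depth = [[1.0, 0.0], [2.0, 2.0]]
color = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
cloud = merge_depth_and_color(depth, color, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

In this toy frame the pixel with zero depth is dropped, so the resulting cloud holds three colored points.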

Images represent signs, which may have multiple meanings, similar forms, or different parts of speech (e.g. a noun vs. a verb). The visually recognized data is referenced against our database to retrieve all the possible sign IDs.
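The lookup can be pictured as a one-to-many mapping from a recognized form to the sign entries that share it. The following is only a minimal sketch: the lexicon contents, the form keys, the ID format, and the `candidate_sign_ids` helper are all placeholders, not SignAll's actual database schema.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class SignEntry:
    sign_id: str
    gloss: str
    part_of_speech: str


# Hypothetical lexicon: one recognized visual form can map to several entries,
# for example a noun and a verb that look alike.
LEXICON: Dict[str, List[SignEntry]] = {
    "form_017": [
        SignEntry("S017a", "CHAIR", "noun"),
        SignEntry("S017b", "SIT", "verb"),
    ],
    "form_042": [SignEntry("S042a", "BOOK", "noun")],
}


def candidate_sign_ids(form_key: str) -> List[str]:
    """Return every sign ID stored for a recognized form (empty if unknown)."""
    return [entry.sign_id for entry in LEXICON.get(form_key, [])]


ambiguous = candidate_sign_ids("form_017")
unknown = candidate_sign_ids("form_999")
```

Returning every candidate, rather than picking one at this stage, leaves the disambiguation to the later sentence-level step.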

In some cases, there could be over 100 combinations of the sign IDs, as they can also represent different syntactic roles within the sentences. Using a variety of machine translation approaches, the probability of different combinations is calculated, and the top 3 most likely options are identified.
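To make the combinatorics concrete, here is a small sketch that enumerates every combination of candidate signs and keeps the three highest-scoring ones. The pairwise probability table is made up and stands in for the machine translation models mentioned above; the glosses, the `FLOOR` value, and the function names are assumptions for illustration only.

```python
import heapq
import itertools
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical candidates for each sign position in one signed sentence.
candidates: List[List[str]] = [
    ["I", "ME"],
    ["SIT", "CHAIR"],
    ["HERE", "THIS"],
]

# Made-up probabilities for adjacent pairs; unseen pairs get a small floor.
PAIR_PROB: Dict[Tuple[str, str], float] = {
    ("I", "SIT"): 0.6,
    ("ME", "CHAIR"): 0.1,
    ("SIT", "HERE"): 0.7,
    ("CHAIR", "HERE"): 0.3,
    ("SIT", "THIS"): 0.1,
}
FLOOR = 0.01


def log_score(sequence: Sequence[str]) -> float:
    """Sum of log-probabilities over adjacent pairs in the sequence."""
    pairs = zip(sequence, sequence[1:])
    return sum(math.log(PAIR_PROB.get(pair, FLOOR)) for pair in pairs)


def top_k(options: List[List[str]], k: int = 3) -> List[Tuple[str, ...]]:
    """Score every combination and return the k most likely, best first."""
    combos = itertools.product(*options)
    return heapq.nlargest(k, combos, key=log_score)


total = math.prod(len(slot) for slot in candidates)  # 2 * 2 * 2 combinations
best = top_k(candidates)
```

With two candidates in each of three positions there are already eight combinations, which shows how quickly the count can pass 100 for longer sentences. Exhaustive enumeration is only practical for short inputs; a real system would more likely prune the search.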

The top 3 results are displayed on the screen for the user. The user then selects the translation that best matches what they signed.

The selected sentence is displayed on the screen for the hearing user to read. It can also be voiced using text-to-speech technology.